A new approach to programming will make it easier to balance execution fidelity against savings in time and energy in future computers.

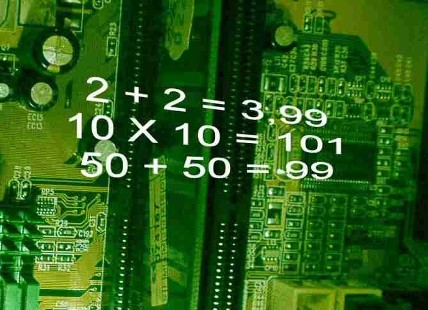

As transistors become smaller, they also become less reliable. So far, chip designers have been able to work around that problem, but in the future this trend toward unreliability could mean that computers stop improving at the pace to which we are accustomed. A third possibility, which some researchers have begun to consider, is simply to let our computers make more mistakes in tasks for which accuracy is not essential.

If, for example, a few pixels in each frame of a high-definition video are decoded incorrectly, viewers probably will not notice, and relaxing the requirement of perfect decoding could yield gains in speed or energy efficiency.

Anticipating the needs that will arise once chips become unreliable, the team of Martin Rinard, Michael Carbin and Sasa Misailovic at the Computer Science and Artificial Intelligence Laboratory (CSAIL) of MIT in Cambridge, USA, has developed a new programming framework that lets software developers specify when errors can be tolerated.
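
To make the idea of "specifying when errors can be tolerated" concrete, here is a minimal sketch in Python. It is not the framework's actual syntax or method. It assumes that each operation fails independently, and the names `result_reliability` and `meets_spec`, as well as the numbers used, are hypothetical and chosen only for illustration.

```python
from math import prod


def result_reliability(op_reliabilities):
    """Probability that every operation in a chain executes correctly.

    Assumption (ours, for illustration): failures are independent, so the
    overall reliability is the product of the per-operation reliabilities.
    """
    return prod(op_reliabilities)


def meets_spec(op_reliabilities, required):
    """Check a developer-stated tolerance: is the result correct with
    probability at least `required`?"""
    return result_reliability(op_reliabilities) >= required


# Hypothetical numbers: decoding one pixel takes 50 operations,
# each of which is correct with probability 0.9999.
pixel_ops = [0.9999] * 50

print(meets_spec(pixel_ops, 0.99))   # True: a loose tolerance is satisfied
print(meets_spec(pixel_ops, 0.999))  # False: a strict tolerance is not
```

In this toy model, a video decoder could accept the looser tolerance and run on the less reliable hardware, while a task that needs exact results would have to demand the stricter one.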

In the near future, chipmakers will probably use components that are not entirely reliable for some applications. Doing so is a tempting way to increase overall hardware efficiency.